Your AI Chatbot Is Stuck in the Past—Here’s Why That Matters

A brief guide to using AI chatbots for current events.

This issue explores Applied AI, showing how AI works in real projects and the lessons that come from building in the real world.

Imagine chatting with a really knowledgeable friend—someone who has read thousands of books, studied countless topics, and can explain just about anything in a clear, insightful way.

Now, imagine that friend hasn’t read a single new piece of information in over a year. No news, no recent discoveries, no updates.

They’re still sharp, but when it comes to current events? They’re guessing.

That’s exactly what happens when you ask an AI chatbot about something happening right now (well…kind of).

How AI Models Learn—At a High Level

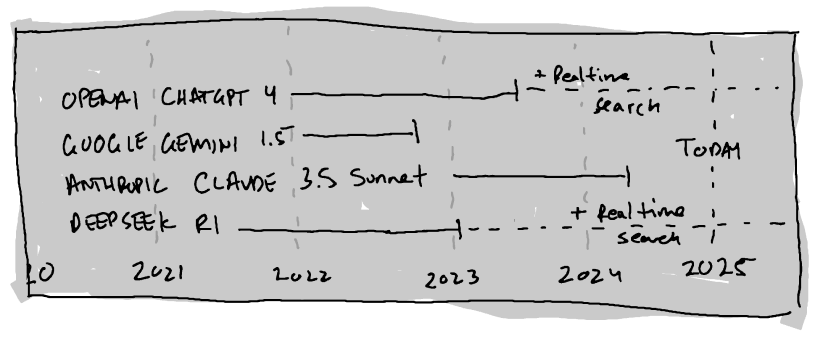

AI models like ChatGPT, Claude, Gemini, and DeepSeek don’t “think” or “learn” the way people do.

Instead, they go through a massive training process (called pre-training) where they’re fed enormous amounts of text: books, articles, websites, scientific papers, and more.

This training happens in discrete runs rather than continuously.

The model learns from what was available at the time of training, and then—once training stops—its knowledge is frozen.

This is what’s called a knowledge cutoff.

So if a model’s last training update was in mid-2024, it has no idea what happened in late 2024 or 2025.

It doesn’t know who won an election (this part’s important, so hold onto it!), what the latest scientific breakthroughs are, or what’s trending on social media.

Can it make predictions based on past patterns? For sure, but that’s not the same as real knowledge.

It’s like asking someone to guess what’s happening in the news based on last year’s headlines.
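The cutoff idea can be sketched as a simple date comparison. This is only an illustration: the cutoff date and the function name `is_after_cutoff` are assumptions made up for the example, not values published for any particular model.

```python
from datetime import date

# Hypothetical cutoff, matching the "mid-2024" example in the text.
ASSUMED_CUTOFF = date(2024, 6, 30)


def is_after_cutoff(event_date: date, cutoff: date = ASSUMED_CUTOFF) -> bool:
    """Return True if the event falls after the end of the training data."""
    return event_date > cutoff


# Anything after the cutoff is something the model can only guess about.
for label, when in [("early 2023", date(2023, 2, 1)), ("late 2024", date(2024, 11, 15))]:
    status = "model is guessing" if is_after_cutoff(when) else "may be in training data"
    print(f"{label}: {status}")
```

Being before the cutoff does not guarantee the model knows about an event; it only means the event could have appeared in the training text.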

What makes this even more challenging: most models are designed to both avoid spreading misinformation and keep users satisfied—sometimes leading them to dismiss information as fake while still trying to sound agreeable.

Can AI Models Access the Internet?

Some AI tools (such as ChatGPT and DeepSeek R1 at the time of writing) can pull in real-time information, but that doesn’t mean they “learn” from it in the way people assume.

When a model has internet access, it often uses something called retrieval-augmented generation (RAG). Instead of relying only on its built-in knowledge, it searches for information the same way you would, by looking it up, and then writes its answer with the retrieved text in front of it.

Think of it like this:

A standard AI chatbot is like a really smart friend with an outdated encyclopedia.

An AI with retrieval capabilities is like that same friend, but now they have access to a real-time search engine and can look things up when needed.

The catch?

Not all AI models have real-time search capabilities.

If they do, the quality of what they find depends on where they’re pulling information from.

They still don’t “learn” from those searches—they just reference them in their responses.

So while some AI tools can fetch current data, it’s still up to you to fact-check and verify sources.
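Here is a minimal sketch of the retrieval step described above, assuming a toy keyword search over a few invented sentences. A real tool would query a live search engine and then send the prompt to a model; the names `DOCUMENTS`, `retrieve`, and `build_prompt` are hypothetical and exist only for this example.

```python
import re

# Invented placeholder sentences standing in for search results.
DOCUMENTS = [
    "2025-01-10: The city council approved the new transit plan.",
    "2023-05-02: The city council rejected the first transit proposal.",
    "2024-09-18: A local bakery won a regional award.",
]


def words(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Return up to top_k documents that share the most words with the question."""
    question_words = words(question)
    scored = [(len(question_words & words(doc)), doc) for doc in documents]
    matches = [pair for pair in scored if pair[0] > 0]
    matches.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in matches[:top_k]]


def build_prompt(question: str, documents: list[str]) -> str:
    """Paste the retrieved text into the prompt; the model itself is unchanged."""
    sources = retrieve(question, documents)
    numbered = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(sources, start=1))
    return (
        "Answer using only the sources below and cite them by number.\n"
        f"Sources:\n{numbered}\n"
        f"Question: {question}"
    )


question = "Did the city council approve the transit plan?"
print(build_prompt(question, DOCUMENTS))
```

Notice that nothing here updates the model. The retrieved sentences are simply added to the prompt for this one answer, which is why the quality of the sources matters so much.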

Why AI Might Call Something a Hoax (Even When It’s Not So Simple)

If you ask an AI chatbot whether something is a hoax, you might get a cautious response—even if the truth is more complicated.

That’s because AI models are incentivized to avoid spreading misinformation. If something is widely disputed or lacks authoritative sources, the model may default to saying:

"This claim is false."

"This is considered a hoax."

"There is no evidence to support this."

Why?

Minimizing Harm – AI developers are under pressure to prevent misinformation from spreading, especially on controversial topics like health, politics, or scientific discoveries. If a model gets something wrong in a high-stakes area, it could cause real-world harm.

Liability and Trust – AI companies don’t want their models used to spread conspiracy theories or false claims. Erring on the side of skepticism protects them from backlash.

Data Limitations – AI models rely on the sources they were trained on. If those sources dismiss something as a hoax, the model will likely reflect that view—even if new evidence emerges later.

What This Means for You

If an AI confidently calls something a hoax, that doesn’t automatically mean it’s wrong—but it doesn’t always mean it’s 100% right, either.

Here’s what to do:

Check Multiple Sources – If a claim is controversial, compare the AI’s response with reputable news sites, fact-checking organizations, and primary sources.

Consider the AI’s Incentives – If it has been designed to be extra cautious, it may avoid uncertainty by taking the safest stance possible.

Look for Nuance – AI tends to summarize complex issues in black-and-white terms. If a topic is debated, dig deeper to see why different sources disagree.

At the end of the day, AI is a tool, not a judge of truth. The best way to use it? Ask smart questions, verify information, and think critically.

How to Avoid Getting Misinformed by AI

Since AI chatbots don’t update their information in real time, here are some ways to avoid taking outdated or incorrect information at face value:

Always Check the Model’s Cutoff Date. Before trusting an AI’s response on a recent topic, check when it was last trained. If it hasn’t been updated in over a year, take its answers with a grain of salt.

Use AI as a Starting Point, Not a Final Answer. If you’re researching a topic, think of AI as a brainstorming tool—not the ultimate source of truth. Use it to get context, then follow up with real sources.

If It Sounds Too Good (or Bad) to Be True, Double-Check. AI models sometimes fill in gaps with confident guesses. If something seems off, do a quick fact-check using reliable sources before assuming it’s accurate.

Ask AI Where It Got Its Information. Some AI models can cite sources when pulling real-time data. If a claim sounds questionable, ask: “Where did you find this?” If it can’t cite a source, don’t assume it’s correct.

The Bottom Line

AI chatbots are incredible tools, but they’re not crystal balls. They don’t keep up with the latest news unless specifically designed to retrieve new information, and even then, they’re only as good as the sources they pull from.

So next time you ask an AI about a breaking news event, a brand-new discovery, or today’s stock prices, remember: it might be guessing.

Use AI wisely—fact-check, verify, and think critically. After all, the best tool is the one you know how to use well.

Some Fun Updates!

🚀 Plumb Public Beta is Coming!

Next week, Plumb (useplumb.com) is going into public beta! I can’t wait to see people using our flows and exploring new ways to integrate AI into their work. I’ll also be sharing how I personally use it to streamline my own AI-powered workflows.

One example of this is the Personal Book Recommendation workflow. Let me know if you want to try it out!

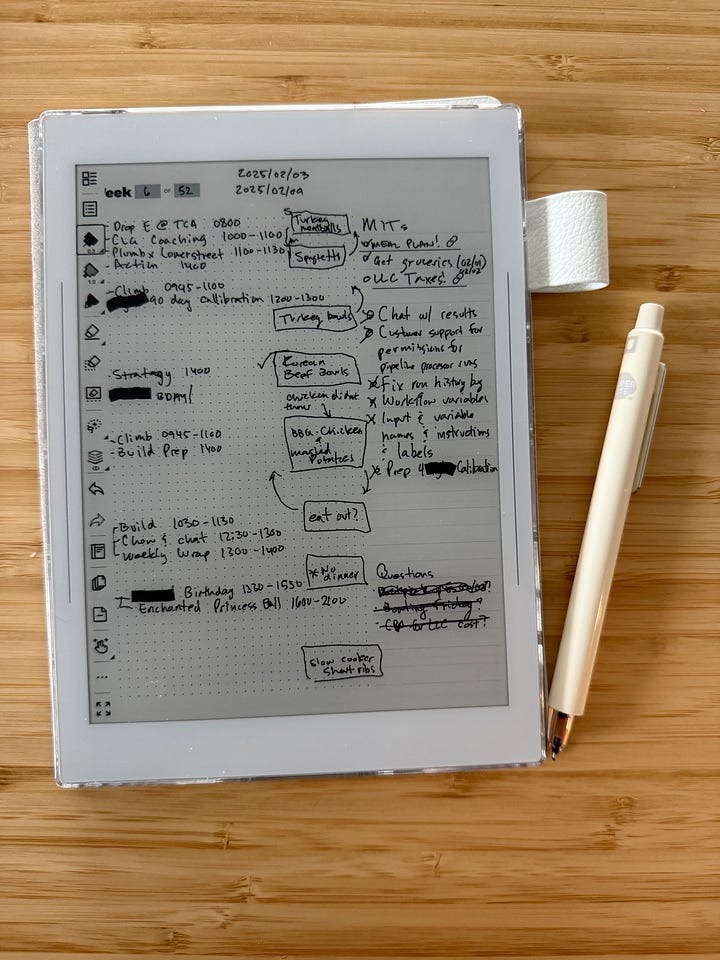

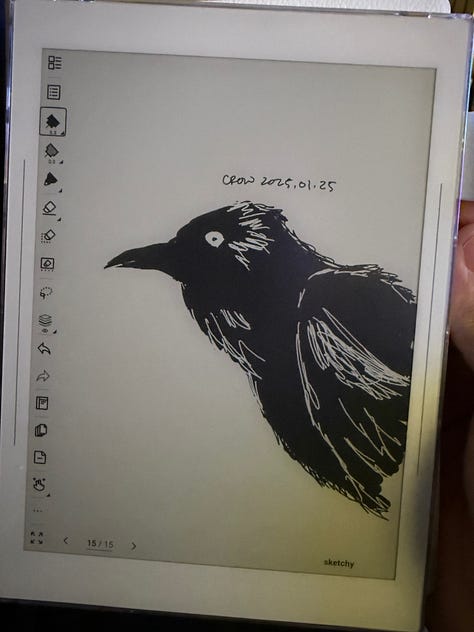

📝 Loving My Supernote Nomad

I’ve been using a Supernote Nomad, and I’m obsessed. It gives me everything I love about paper journaling—the tactile feel, the focus—but with the added power of digitization. It’s been a game-changer for organizing my thoughts, sketches, and ideas.

I also love reflecting on ideas with my Supernote Nomad. It gives me the freedom to sketch, write, and explore these thoughts in a way that feels tactile yet effortlessly digital.

You may have noticed some sketches in this issue; they were made on the Nomad!

If the Nomad is interesting to you, I’m starting to post about how I’m using it on my YouTube channel!

Until next time, chase curiosity! ✨🤘

Chase