Hello fellow AI nerds!

The goal of this issue is to walk you through how I approach “is it true?” questions: testing as many cases as possible to find out whether the information someone has given you is actually accurate.

You know that moment when you’ve explained the same thing so many times you just want to build a tool to do it for you?

That’s exactly what happened to me with the whole “YAML vs JSON for LLMs” debate.

Here’s the thing: everyone assumes JSON is less “token efficient” because it looks like it has more characters, especially if you test human-readable (pretty-printed) JSON.

So most people assume YAML wins: less visual noise, fewer curly braces, right?

But I kept wondering... does that actually translate to fewer tokens when an LLM processes it?

So I did what any curious person would do: I built an interactive benchmark to find out.

The Experiment

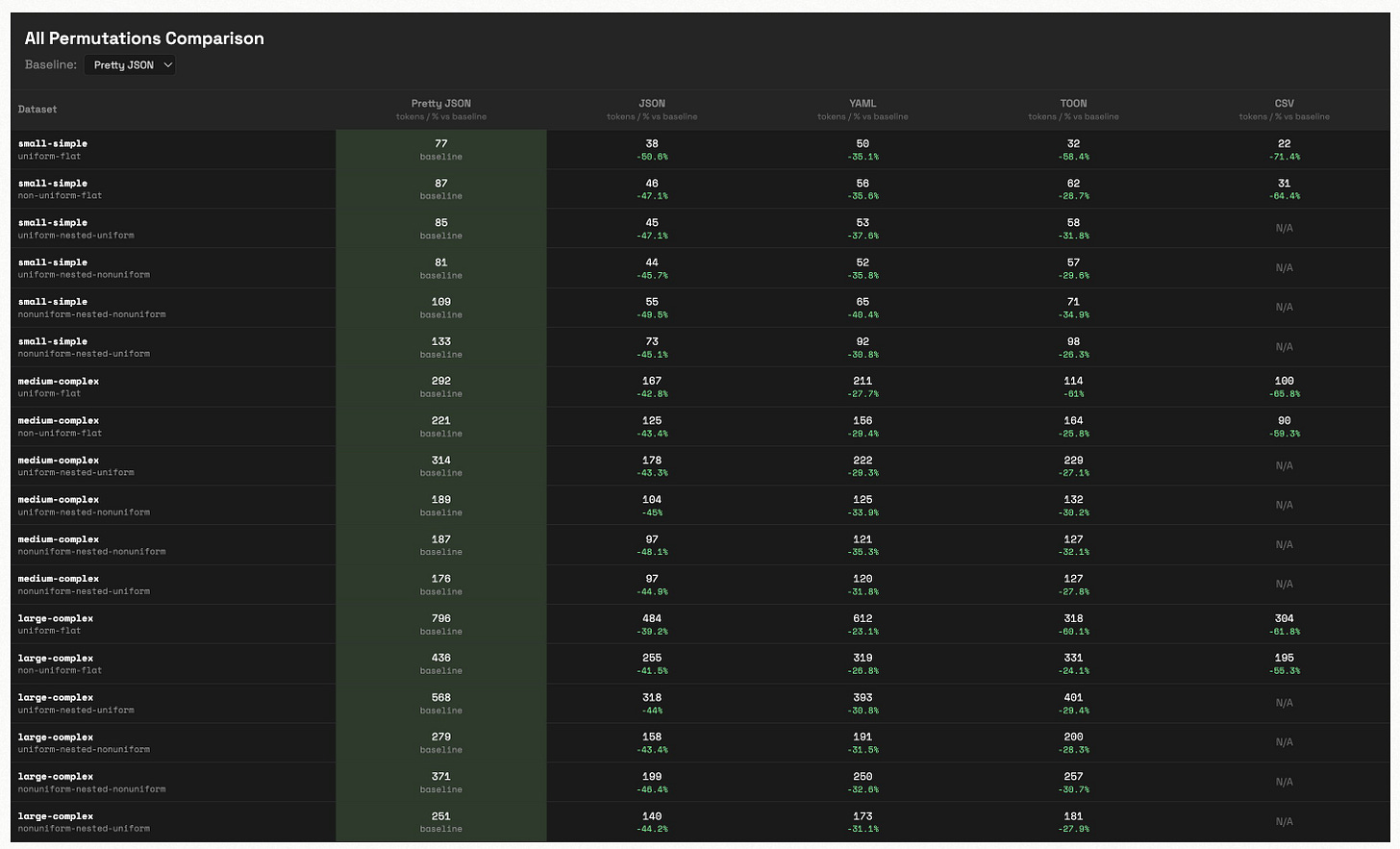

I took 21 different datasets (3 sizes, 7 different structures) and converted each one into five formats:

Pretty JSON (the readable kind with indentation)

Compressed JSON (no whitespace)

YAML

TOON (a compact format designed for LLMs)

CSV

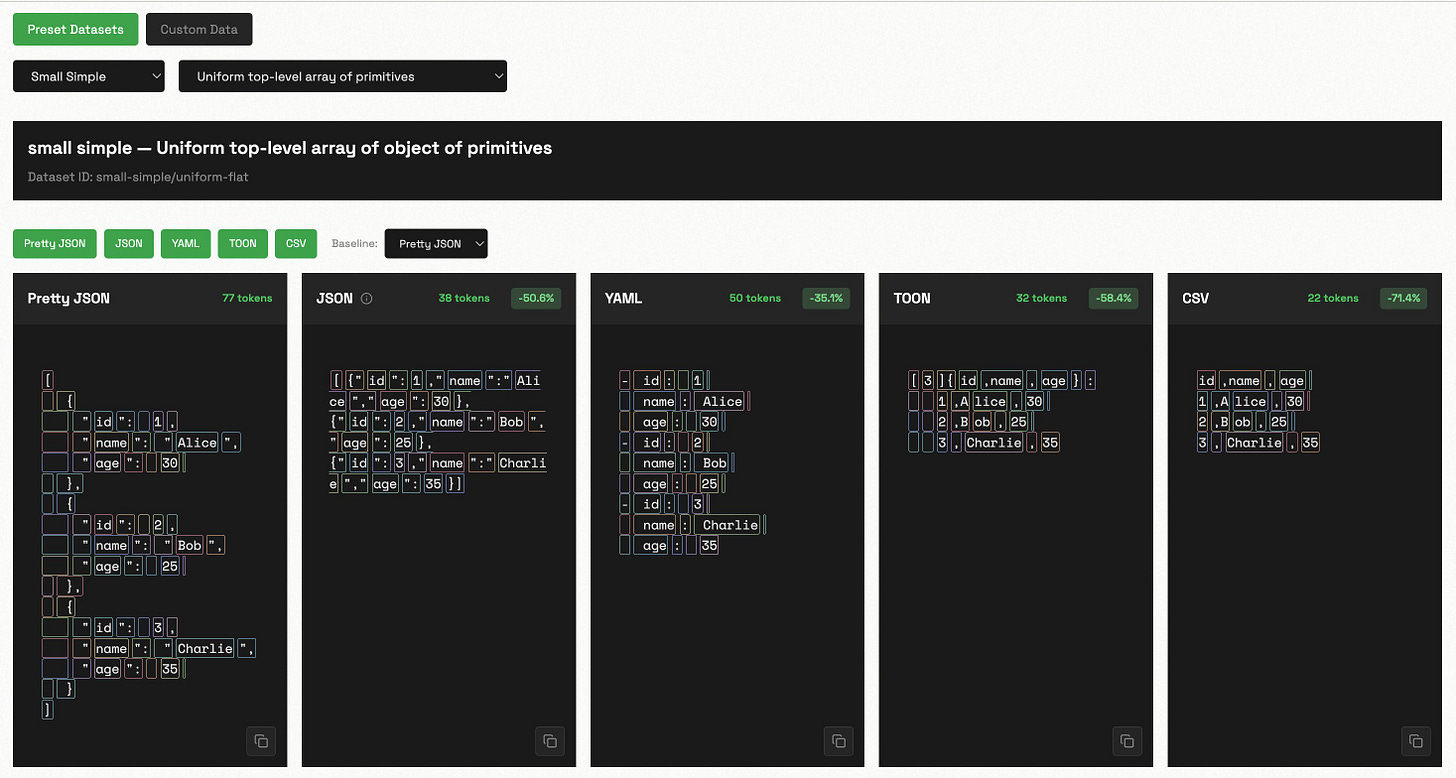

Then I tokenized everything using GPT-4’s tokenizer and built a visualization tool where you can hover over the actual tokens and see exactly how each character gets chunked.
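If you want to reproduce the core measurement without the playground, here’s a minimal Python sketch. It assumes the `tiktoken` package is installed and uses `tiktoken.encoding_for_model("gpt-4")`; if the tokenizer can’t be loaded (package missing, or no network to fetch the encoding file), it falls back to counting characters, which is only a rough proxy. The sample records and helper names are made up for illustration, and YAML/TOON are left out to keep it to the standard library.

```python
import csv
import io
import json

# Hypothetical sample data: flat and uniform, so CSV can represent it.
RECORDS = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
    {"id": 3, "name": "Carol", "role": "user"},
]


def to_pretty_json(records):
    # Human-readable JSON with two-space indentation
    return json.dumps(records, indent=2)


def to_compact_json(records):
    # separators=(",", ":") removes the spaces json.dumps adds by default
    return json.dumps(records, separators=(",", ":"))


def to_csv(records):
    # Header row once, then one row of values per record
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(records[0]), lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buf.getvalue()


def make_counter():
    """Return (count_fn, unit). Uses tiktoken's GPT-4 encoding when it can be loaded."""
    try:
        import tiktoken

        enc = tiktoken.encoding_for_model("gpt-4")

        def count(text):
            return len(enc.encode(text))

        return count, "tokens"
    except Exception:
        # tiktoken missing or its encoding file could not be fetched: fall back to characters
        return len, "characters (rough proxy)"


def compare(records):
    count, unit = make_counter()
    outputs = {
        "pretty_json": to_pretty_json(records),
        "compact_json": to_compact_json(records),
        "csv": to_csv(records),
    }
    return {name: count(text) for name, text in outputs.items()}, unit


if __name__ == "__main__":
    counts, unit = compare(RECORDS)
    for name, n in counts.items():
        print(f"{name:>12}: {n} {unit}")
```

Swap in your own records to compare. For nested data, drop the CSV step: `csv.DictWriter` will just stringify nested dicts rather than represent them.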

What I Found (And Why It Matters)

Finding #1: Whitespace is expensive

Pretty JSON uses 60-90% more tokens than compressed JSON. That indentation adds up fast. This shouldn’t surprise anyone; what should be surprising is how often people use pretty JSON as the JSON baseline in what they call an apples-to-apples comparison.
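For reference, here’s what the two JSON variants look like with Python’s standard `json` module (the record is a made-up example). Note that even without `indent`, `json.dumps` puts a space after every `,` and `:` by default, so you need `separators=(",", ":")` to get truly compact output.

```python
import json

# Hypothetical nested record, just to show where the extra characters come from
record = {"user": {"id": 42, "tags": ["a", "b"]}, "active": True}

pretty = json.dumps(record, indent=2)                # newlines + indentation
compact = json.dumps(record, separators=(",", ":"))  # no whitespace at all

print(pretty)
print(compact)  # {"user":{"id":42,"tags":["a","b"]},"active":true}

# Same data either way: both strings parse back to the identical object
assert json.loads(pretty) == json.loads(compact)
```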

Finding #2: YAML is actually worse than compressed JSON

Despite looking cleaner, YAML consistently uses 15-25% MORE tokens than compressed JSON. The colons, dashes, and YAML syntax markers all get tokenized.
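To see where YAML’s syntax markers come from, here’s a tiny hand-rolled emitter for a list of flat records, in the block style YAML libraries typically produce. It’s a hypothetical illustration, not a real YAML serializer (no quoting, escaping, or nesting). Every record still repeats every key; YAML just trades braces and quotes for `- `, `: `, and indentation, all of which the tokenizer still has to encode.

```python
import json

# Hypothetical flat, uniform records
records = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]


def to_block_yaml(rows):
    """Toy emitter: list of flat dicts -> block-style YAML sequence.

    Assumes plain scalar values that need no quoting; not a general YAML serializer.
    """
    lines = []
    for row in rows:
        for i, (key, value) in enumerate(row.items()):
            # "- " starts a new list item; the remaining keys are indented to line up
            prefix = "- " if i == 0 else "  "
            lines.append(f"{prefix}{key}: {value}")
    return "\n".join(lines) + "\n"


yaml_text = to_block_yaml(records)
json_text = json.dumps(records, separators=(",", ":"))
print(yaml_text)
print(json_text)
```

For this toy input the YAML is actually a few characters shorter than the compact JSON, which is exactly why character counts aren’t enough: run both strings through the tokenizer before drawing conclusions.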

Finding #3: TOON delivers real compression

TOON shows a 20-35% token reduction vs compressed JSON, but only when the data is uniform. When it does win, it’s because it eliminates repeated keys and uses positional encoding.
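Here’s roughly what “eliminating repeated keys and using positional encoding” means, as a Python sketch loosely modeled on TOON’s tabular-array syntax (`name[N]{fields}:` followed by one comma-separated row per record). `to_toon_table` is a hypothetical helper, not the reference TOON encoder: it skips quoting, escaping, nesting, and alternative delimiters, and writes values with `str()`.

```python
def to_toon_table(key, rows):
    """Encode a uniform list of flat dicts as one TOON-style tabular block (sketch only)."""
    fields = list(rows[0].keys())
    # Positional encoding only works if every row has the same fields in the same order
    if any(list(row.keys()) != fields for row in rows):
        raise ValueError("rows are not uniform; tabular encoding needs identical keys")
    # Keys are declared once in the header, along with the row count
    header = f"{key}[{len(rows)}]{{{','.join(fields)}}}:"
    # Each row is just its values, matched to the header by position
    body = ["  " + ",".join(str(row[f]) for f in fields) for row in rows]
    return "\n".join([header, *body])


users = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]
print(to_toon_table("users", users))
# users[2]{id,name,role}:
#   1,Alice,admin
#   2,Bob,user
```

The keys appear once in the header and every row relies on position, which is also why it only pays off when every record has the same fields.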

Finding #4: CSV dominates for flat structures

When your data is flat and uniform, CSV achieves 30-40% token savings. But it can’t handle nested objects (hence all the N/A entries in the results).

Between compressed JSON and CSV, you’re covered either way: JSON for the use cases where TOON underperforms it, and CSV for the flat, uniform cases where TOON outperforms JSON (CSV saves even more there).

Why This Actually Matters

Here’s the thing: compressed JSON is your default winner for most real-world use cases.

YAML? It’s consistently 15-25% worse than compressed JSON. So if you’ve been reaching for YAML thinking it saves tokens, you’ve been doing the opposite.

The one exception: uniform flat data

If your data is uniform (same keys in every record) and flat (no nesting), CSV absolutely crushes everything with 30-40% token savings. That’s because it declares headers once and just lists values.
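The “headers once, then values” idea in a few lines of Python’s standard `csv` module (the sample rows are made up):

```python
import csv
import io

# Hypothetical flat, uniform rows
rows = [
    {"id": 1, "name": "Alice", "role": "admin"},
    {"id": 2, "name": "Bob", "role": "user"},
]

buf = io.StringIO()
# lineterminator="\n" replaces the csv module's default "\r\n" line endings
writer = csv.DictWriter(buf, fieldnames=["id", "name", "role"], lineterminator="\n")
writer.writeheader()    # keys written exactly once
writer.writerows(rows)  # then only values, one line per record
print(buf.getvalue())
# id,name,role
# 1,Alice,admin
# 2,Bob,user
```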

But the moment your data has:

Nested objects

Variable schemas (different keys per record)

Complex structures

CSV can’t handle it (notice all those N/A entries). You’re back to JSON or TOON.
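A sketch of that decision, assuming your records arrive as a list of Python dicts. `is_flat_uniform` and `serialize_for_llm` are hypothetical helper names; TOON is left out because there’s no encoder for it in the standard library.

```python
import csv
import io
import json

# Values CSV can hold directly; anything else (dicts, lists) counts as nested
SCALARS = (str, int, float, bool, type(None))


def is_flat_uniform(records):
    """True if every record is a dict with the same keys and only scalar values."""
    if not records or not all(isinstance(r, dict) for r in records):
        return False
    keys = set(records[0])
    return all(
        set(r) == keys and all(isinstance(v, SCALARS) for v in r.values())
        for r in records
    )


def serialize_for_llm(records):
    """Hypothetical helper: CSV for flat, uniform data, compact JSON for everything else."""
    if is_flat_uniform(records):
        buf = io.StringIO()
        writer = csv.DictWriter(buf, fieldnames=list(records[0]), lineterminator="\n")
        writer.writeheader()
        writer.writerows(records)
        return "csv", buf.getvalue()
    return "json", json.dumps(records, separators=(",", ":"))


flat = [{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}]
nested = [{"id": 1, "address": {"city": "Oslo"}}]
print(serialize_for_llm(flat)[0])    # csv
print(serialize_for_llm(nested)[0])  # json
```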

TOON: a long way to go

TOON didn’t perform consistently across the board. Maybe with more work on the format (and more benchmarking) it can become more effective. I also didn’t compare the quality of model outputs across these formats, and my hunch is that because TOON isn’t a format any model would have been trained on, it might still perform worse in eval testing.

So what should you actually do?

Flat, uniform data (like database exports, simple lists): Use CSV

Complex/nested uniform data where token efficiency matters: Try TOON (but your mileage may vary, since it’s not a standard format)

Everything else: Compressed JSON is fine (and way better than YAML)

Human-readable data: Just don’t send it over the wire. Pretty-print before and after an LLM call if people need to read it, but don’t send human-readable structured data to the model unless the formatting is contextually relevant (i.e., you’re debugging a YAML config).
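In practice that means keeping the wire format compact and only formatting for display on your side. A minimal sketch with Python’s standard `json` module; the response string is made up and stands in for whatever your LLM call returns.

```python
import json

# Hypothetical compact JSON returned by an LLM call
raw_response = '{"status":"ok","items":[{"id":1,"score":0.9}]}'

data = json.loads(raw_response)    # parse the compact payload
print(json.dumps(data, indent=2))  # pretty-print locally, for humans only

# Anything that goes back to the model gets re-serialized compactly
payload = json.dumps(data, separators=(",", ":"))
print(payload)  # {"status":"ok","items":[{"id":1,"score":0.9}]}
```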

For RAG systems and LLM-heavy apps, this compounds at scale:

Cost: Fewer tokens = lower API bills

Latency: Smaller payloads = faster processing

Context windows: More room for actual prompts

A 10K-token JSON payload becomes ~7K in TOON or ~6K in CSV (if your structure allows it). That’s real savings when you’re processing thousands of requests.
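The arithmetic behind that, as a small Python sketch. The per-payload numbers come from the paragraph above; the 1,000-request volume is an assumed example, and the helper names are hypothetical.

```python
def reduction(baseline_tokens, new_tokens):
    """Fractional token reduction vs the baseline (0.3 means 30% fewer tokens)."""
    return 1 - new_tokens / baseline_tokens


def tokens_saved(baseline_tokens, new_tokens, requests):
    """Total tokens saved across `requests` calls with the same payload size."""
    return (baseline_tokens - new_tokens) * requests


# 10K-token JSON payload vs ~7K in TOON and ~6K in CSV; 1,000 requests is an assumption
for fmt, tokens in [("TOON", 7_000), ("CSV", 6_000)]:
    print(f"{fmt}: {reduction(10_000, tokens):.0%} fewer tokens, "
          f"{tokens_saved(10_000, tokens, 1_000):,} tokens saved")
# TOON: 30% fewer tokens, 3,000,000 tokens saved
# CSV: 40% fewer tokens, 4,000,000 tokens saved
```

Those 30% and 40% figures line up with the TOON (20-35%) and CSV (30-40%) ranges from the findings above.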

Try It Yourself

I built the whole thing as an interactive playground. You can:

Toggle between formats

See token-by-token breakdown with colored highlighting

Switch baseline comparisons

Test different dataset structures

Watch the token counts change in real-time

The tool uses tiktoken (the actual GPT-4 tokenizer) and processes everything server-side in Next.js. I tested it with simple flat arrays, deeply nested objects, uniform data, chaotic data... all the real-world messiness.

What’s Your Experience?

Have you run into context window limits? Tried different formats? I’d love to hear what you’ve discovered.

Reply to this email or find me on [your preferred platform]. Let’s geek out about this together.

Chase

P.S. - I originally built this just to have a URL I could share when people asked “but what about YAML?” Now I’m kind of obsessed with finding other ways data format impacts LLM performance.

If you have ideas for what to test next, let me know.