Four Flavors of AI—and When Each Tastes Like Failure

Chat. Agent. Workflow. Copilot. Each solves a different class of problem—here’s how to tell them apart.

This issue focuses on AI Concepts, unpacking the core ideas, frameworks, and principles that guide how we design and use AI.

Not all AI is created equal—and more importantly, not all interactions with AI are.

If you’re building, automating, or consulting on AI-powered systems, it’s critical to choose the right modality for the task.

Here’s the core idea: There are four major ways we interact with LLMs—each with different strengths, tradeoffs, and use cases.

Let’s break them down.

Four Flavors of AI

Chat

The most familiar is chat—freeform, natural language conversations like using ChatGPT.

It’s fast, flexible, and perfect for ideation, exploration, or casual problem-solving.

But it’s also unstructured, ephemeral, and tough to automate reliably.

Great for when you need a sounding board, not a system.

When It Fails

You give a chat interface a complex, multi-step task—summarize a report, categorize feedback, write follow-up emails based on user profiles.

You tweak the prompt, hit “regenerate” three times, copy-paste pieces into another tool, and eventually give up because it can’t remember what you said two messages ago.

What it tastes like:

Frustratingly inconsistent outputs.

Endless trial and error.

Zero audit trail.

A “ghostwriter” with amnesia.

Why it fails: Chat is great for ideation, not execution. It’s a sandbox, not a system.

Agents

Next is the agent. This is where the AI becomes more autonomous—able to pursue a goal, make decisions, use tools, and adapt.

You give it an objective, and it figures out the steps.

Think of it like a junior analyst with a toolkit and a mission.

Agents are powerful for complex, uncertain tasks—but they’re the AI equivalent of a slot machine.

Agents are harder to debug, more opaque, and can easily go off the rails without careful guardrails.

When It Fails

You deploy an agent to scrape data, write a summary, generate a chart, send an email, and schedule a follow-up—all on its own.

Halfway through, it hits an ambiguous error, takes a wrong turn, and sends a half-written message to your client. Oops.

What it tastes like:

Black-box decisions.

Hallucinated outputs.

Surprise side effects.

Debugging spaghetti.

Why it fails: Without tight control or clear constraints, agents drift, loop, or overreach. They’re juniors without adult supervision.
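To make "tight control" concrete, here is a minimal sketch of an agent loop with two guardrails: a tool allowlist and a step budget. Everything in it is an illustrative assumption, not a real framework: the tool functions, `run_agent`, and the scripted policy that stands in for the LLM are all hypothetical.

```python
from typing import Callable, Iterator

# Hypothetical tools; a real agent would call external APIs here.
def scrape(arg: str) -> str:
    return f"data from {arg}"

def summarize(arg: str) -> str:
    return f"summary of {arg}"

def send_email(arg: str) -> str:
    return f"email sent: {arg}"

ALL_TOOLS: dict[str, Callable[[str], str]] = {
    "scrape": scrape,
    "summarize": summarize,
    "send_email": send_email,
}

Action = tuple[str, str]  # (tool name, argument)

def run_agent(choose_action: Callable[[str], Action],
              allowed: set[str],
              max_steps: int = 5) -> list[str]:
    """Run an agent loop guarded by a tool allowlist and a step budget."""
    log: list[str] = []
    observation = ""
    for _ in range(max_steps):
        tool, arg = choose_action(observation)
        if tool == "done":
            log.append("done")
            return log
        if tool not in allowed or tool not in ALL_TOOLS:
            # Guardrail 1: stop instead of performing an unapproved side effect.
            log.append(f"blocked: {tool}")
            return log
        observation = ALL_TOOLS[tool](arg)
        log.append(f"{tool} -> {observation}")
    # Guardrail 2: never loop forever.
    log.append("stopped: step budget exhausted")
    return log

def scripted_policy() -> Callable[[str], Action]:
    """Stand-in for the LLM: replays a fixed plan, then says it is done."""
    plan: Iterator[Action] = iter([
        ("scrape", "example.com"),
        ("summarize", "the data"),
        ("send_email", "half-written draft"),
    ])
    return lambda observation: next(plan, ("done", ""))

# The agent may read and summarize, but is not allowed to email anyone.
log = run_agent(scripted_policy(), allowed={"scrape", "summarize"})
for entry in log:
    print(entry)
# Last line printed: blocked: send_email
```

The half-written email never leaves the building, and the log shows which decision was stopped and why.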

Agentic Workflows

Speaking of guardrails, an agentic workflow gives you structure and control.

Rather than handing over full autonomy, you orchestrate a series of well-defined steps, some of which may use LLMs.

It’s less “AI, go do this” and more “here’s the recipe—help with Step 3.”

This modality is perfect for business automation, high-reliability tasks, and modular systems.

It’s what most AI creators and builders end up shipping when they care about things that actually work consistently and predictably.
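As a rough sketch of the "here’s the recipe, help with Step 2" idea, the workflow below runs three fixed steps and hands only one of them to a model. The function names are hypothetical, and `fake_llm_categorize` is a keyword check standing in for a real LLM call.

```python
def fake_llm_categorize(text: str) -> str:
    """Stand-in for an LLM call (assumption): returns a category label."""
    return "bug" if "crash" in text.lower() else "other"

def load(raw: str) -> list[str]:
    # Step 1 (plain code): split the input into non-empty feedback items.
    return [line.strip() for line in raw.splitlines() if line.strip()]

def categorize(items: list[str]) -> list[tuple[str, str]]:
    # Step 2 (the only LLM-backed step): label each item.
    return [(item, fake_llm_categorize(item)) for item in items]

def report(labeled: list[tuple[str, str]]) -> dict[str, int]:
    # Step 3 (plain code): count items per label.
    counts: dict[str, int] = {}
    for _, label in labeled:
        counts[label] = counts.get(label, 0) + 1
    return counts

def run_workflow(raw: str) -> dict[str, int]:
    # The order of steps is fixed by the code, not chosen by the model.
    return report(categorize(load(raw)))

feedback = "App crashes on login\n\nLove the new dashboard\nCrash when exporting"
result = run_workflow(feedback)
print(result)  # {'bug': 2, 'other': 1}
```

Because the model only fills in one step, you can swap, test, or log that step without touching the rest of the recipe.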

When It Fails

You build a workflow with perfect logic: input, analysis, categorization, generation, QA.

But when real data hits, Step 3 breaks due to weird edge cases, Step 4 returns junk because Step 2 was a little off, and now you’re duct-taping fallback logic at 11 p.m.

What it tastes like:

Fragile logic chains.

Debugging nested prompt templates.

A “modular” system that’s 80% exception handling.

Why it fails: You’ve added structure—but brittle, hardcoded structure. Without flexibility or graceful error handling, it collapses under real-world messiness.
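One hedged way to picture graceful error handling: validate each LLM step’s output before the next step sees it, and route anything unexpected to a human instead of passing junk downstream. The label set, the `needs_review` bucket, and the `flaky_llm_label` stub below are assumptions for illustration only.

```python
from typing import Callable

ALLOWED_LABELS = {"bug", "feature", "praise"}
FALLBACK = "needs_review"  # hypothetical bucket for a human to look at

def flaky_llm_label(text: str) -> str:
    """Stand-in for an LLM step that sometimes returns junk (assumption)."""
    canned = {
        "It crashed": "Bug ",
        "Add dark mode": "Feature request!!",
        "": "???",
    }
    return canned.get(text, "praise")

def label_with_fallback(text: str,
                        llm: Callable[[str], str] = flaky_llm_label) -> str:
    """Normalize the model's answer; fall back if it is not a known label."""
    try:
        raw = llm(text)
    except Exception:
        # A failed call is treated like an invalid answer, not a crash.
        return FALLBACK
    label = raw.strip().lower()
    return label if label in ALLOWED_LABELS else FALLBACK

for item in ["It crashed", "Add dark mode", "Great app", ""]:
    print(repr(item), "->", label_with_fallback(item))
```

Here "Bug " is cleaned up to `bug`, while "Feature request!!" and "???" land in `needs_review` rather than quietly breaking the next step.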

Copilots

Finally, there’s the copilot: an LLM embedded inside an interface, assisting users in context—like GitHub Copilot, Notion AI, or the “help me write” button in Gmail.

These are scoped, productized helpers that augment human workflows inside the tools we already use.

They don’t operate independently, but they feel like a superpower when designed well.

When It Fails

You’re in the middle of a task (writing an email, editing a doc, reviewing code) and the copilot is just there. You click it once, get a useless suggestion, and never touch it again. Or worse: you don’t even notice it exists. It’s the AI equivalent of a treadmill in your garage: technically powerful, functionally unused.

What it tastes like:

“That button? I tried it once.”

Slow, irrelevant, or awkward help.

Distracting more than assisting.

A tool that’s never in sync with your intent.

Why it fails: Copilots succeed when they show up at the exact right moment, in a way that feels intuitive and helpful. When they interrupt flow, offer shallow suggestions, or sit idle waiting to be summoned, users simply stop caring.

Use the Right Tool for the Task

Here’s a quick reference to help you decide which modality fits your need:
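A rough sketch of such a reference, built only from the distinctions drawn in the sections above. The function name and the three yes/no questions are illustrative assumptions, not a formal rubric.

```python
def suggest_modality(repeatable: bool,
                     steps_known: bool,
                     inside_existing_tool: bool) -> str:
    """Suggest a modality from three yes/no questions about the task."""
    if inside_existing_tool:
        # Help offered in context, inside a tool people already use.
        return "copilot"
    if not repeatable:
        # One-off ideation or exploration: a sounding board, not a system.
        return "chat"
    if steps_known:
        # Repeatable and you can write the recipe: orchestrate the steps.
        return "agentic workflow"
    # Repeatable goal, but the steps have to be worked out along the way.
    return "agent"

print(suggest_modality(repeatable=False, steps_known=False, inside_existing_tool=False))  # chat
print(suggest_modality(repeatable=True, steps_known=True, inside_existing_tool=False))    # agentic workflow
print(suggest_modality(repeatable=True, steps_known=False, inside_existing_tool=False))   # agent
print(suggest_modality(repeatable=True, steps_known=True, inside_existing_tool=True))     # copilot
```

Real tasks rarely answer these questions so cleanly, so treat the result as a starting point rather than a verdict.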

The two most important things you can do are to start stupidly small and to notice when an action can be operationalized.

You’re probably using something like ChatGPT or Claude for tasks today.

Which tasks do you repeat? How easy is it to turn them into a process? What knowledge would you need to spell out before that process could run as an agentic workflow or an agent? Which tasks are best left as open-ended exploration?

You’re not just choosing an LLM—you’re choosing how to use it.

Modality is how we shape the intelligence we’re using, and as you learn the limitations of each modality, you learn which one to pick for the moment.

The power in using AI well is picking the right tool for the right task.

So next time you reach for ChatGPT, ask yourself: Am I holding a hammer, or just staring at a nail?

Field Notes

This is the part of the newsletter where I share tools, updates, experiments, and anything else worth passing on.

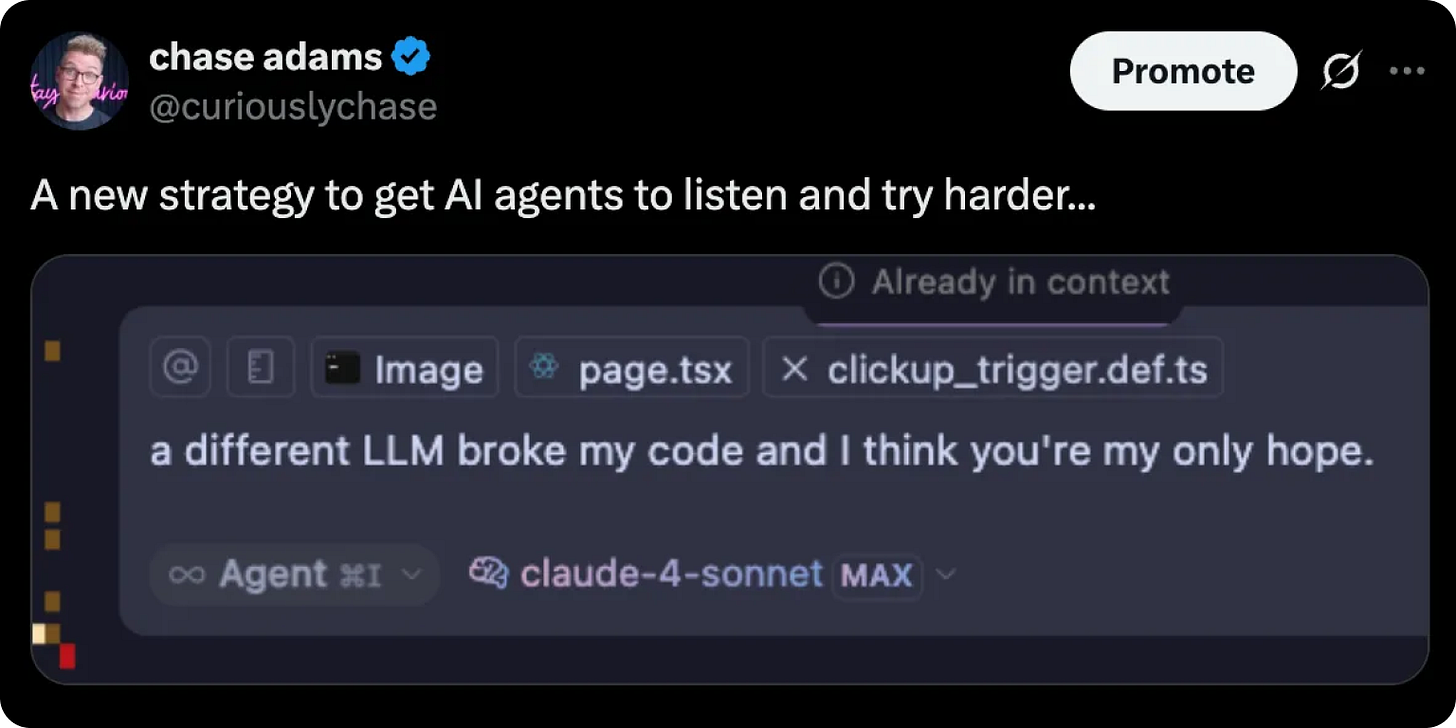

AI agents are all the rage, but I believe agent winter is coming. In the meantime, when I’m using agents to write code, I’m trying something new (tweet). 🤪

Pulled from the Stack

Every week, I pull one tool from my personal or professional stack—something I’m using—and give it a quick spotlight.

Recently our engineering team started using CodeRabbit. It isn’t just another code review bot—it’s the most thoughtful AI pair reviewer I’ve seen.

It drops real review suggestions on your PRs—concise, specific, and often insightful enough to spark refactoring or a second thought.

It adds a smart summary of the PR to your first comment, which means your teammates can grok the gist without digging.

It even generates a sequence diagram showing what changed in your implementation—pure gold for visual thinkers or systems-level folks.

It also writes a little poem in the comments, based on the changes.

And yes, it actually makes you want to read your own pull requests. Check it out!

Recent NYC Trip

Every quarter I go to NYC to meet with the Plumb board. Aaron (my co-founder) is always a wonderful host and this time was no different.

We walked out of our board meeting with lots of energy and great insights about how to continue to tune our business.

As for recreation, this trip I got to see Hamilton and visit Trinity Church (where the Hamilton family is buried).

It was sobering to visit places that are such an important part of United States history. I was thankful to have a chance to experience the mix of our culture and history.

Want to Support Me?

I’ve had a few people ask if I’ve considered having subscriptions on my newsletter (Thank you! 🙏).

If you really want to support me, check out Plumb or send this newsletter to someone who you think would dig it.

If you’re building agentic workflows, automations, or AI tools and want to share them with the world—Plumb was made for you.

We’re building the easiest way to productize, promote, and monetize your AI-powered workflows. No infra headaches. No weird licensing hacks. Just a clean path from “this is useful” to “this is live and making money.”

👉 Curious? Come check us out at useplumb.com. We’d love to show you what we’re building—and help you ship something people can use.

May your agents stay on task and your outputs stay weird.

✨🤘Chase